Maven To Bazel

Our application has been in development for 25 years and consists of 2.2 MLOC Java and 340 KLOC JS/TS. The code is split into 600 Maven modules, and compiling it and running the unit tests takes around 5 minutes.

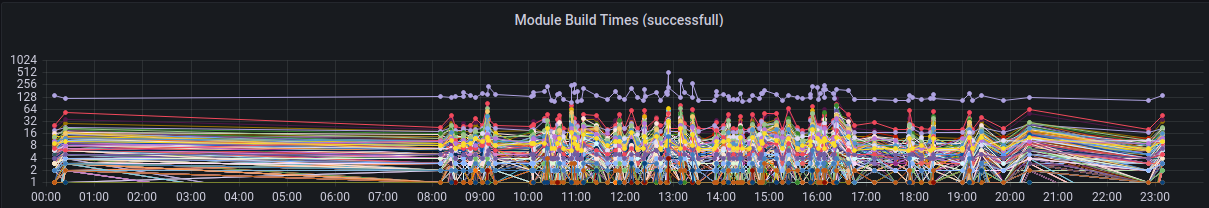

Looking at our build times, we see that one module is not like the others: our frontend module. It contains all of our JS/TS code and uses webpack to build it. Note that the axis is logarithmic: the frontend takes around 4 times as long as the next slowest module.

We are always interested in improving our build times, and this situation looked like very low-hanging fruit. Our developers come in 3 flavors: backend, frontend and full stack. This means that there are commits that change only the backend or only the frontend.

So could we just cache the other part and reduce our build times for such commits drastically?

This post shows how we did that with Bazel and then continued to move more parts to Bazel. We did this incrementally and used code generation to ensure that both builds do the same thing and we only have to maintain one of them.

Why not Maven?

Our plan is quite simple: split our application in 3 parts.

The frontend part only contains JS/TS and CSS. Backend consists of Java, while the glue part depends on frontend and backend. The bulk of our build time is spent in frontend and backend. Building glue is quite fast.

If we have a commit that only changes the frontend, we’d like to take backend from a previous build and only build frontend and glue.

With some scripting we could determine if only frontend has changed.
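Such a script could map every changed path, for example from git diff --name-only, to the part that owns it. Below is a hypothetical Java sketch. It assumes each module lives in a top-level directory and that the directory-to-part mapping comes from the module graph analysis described later. ChangedParts and Part are illustrative names.

```java
import java.util.EnumSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

enum Part { FRONTEND, BACKEND, GLUE }

final class ChangedParts {
    // Returns the parts touched by a change set. A path outside every known module
    // (e.g. the root pom.xml) conservatively marks all parts as changed.
    static Set<Part> affected(List<String> changedPaths, Map<String, Part> moduleDirToPart) {
        Set<Part> parts = EnumSet.noneOf(Part.class);
        for (String path : changedPaths) {
            int slash = path.indexOf('/');
            String topLevelDir = slash < 0 ? "" : path.substring(0, slash);
            Part part = moduleDirToPart.get(topLevelDir);
            if (part == null) {
                return EnumSet.allOf(Part.class);
            }
            parts.add(part);
        }
        return parts;
    }

    // True if backend (and glue inputs) are untouched, so backend could come from a previous build.
    static boolean onlyFrontendChanged(Set<Part> parts) {
        return parts.equals(EnumSet.of(Part.FRONTEND));
    }
}
```

Treating unknown paths as "everything changed" errs on the side of rebuilding too much, never too little.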

Then we could use Maven’s --projects flag to control the content of the reactor.

Dependencies not in the reactor are taken from the local/remote repository.

We would have to ensure that the correct version of backend is available in the repository.

Maven’s SNAPSHOT versions aren’t suitable for this: we would always get the most recent SNAPSHOT of our backend modules, not necessarily the one that matches the commit we are building.

We would need concrete versions to identify these artifacts correctly. Then we could rewrite the pom files with these concrete versions and Maven would do the right thing.

As a version we could use a hash of the inputs to our modules.
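One way to compute such a version is to hash every input file of a module. This is a minimal sketch. It assumes a module’s inputs are all files below its directory except Maven’s target directory. InputVersion is a hypothetical helper, not code from our build.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.HexFormat;
import java.util.List;
import java.util.stream.Stream;

final class InputVersion {
    // Hashes the relative path and content of every file below moduleDir,
    // skipping Maven's target/ directory. Files are visited in sorted order,
    // so the result does not depend on file system iteration order.
    static String compute(Path moduleDir) {
        try (Stream<Path> walk = Files.walk(moduleDir)) {
            List<Path> files = walk
                    .filter(Files::isRegularFile)
                    .filter(p -> !moduleDir.relativize(p).startsWith("target"))
                    .sorted()
                    .toList();
            MessageDigest digest = MessageDigest.getInstance("SHA-256");
            for (Path file : files) {
                String relative = moduleDir.relativize(file).toString().replace('\\', '/');
                digest.update(relative.getBytes(StandardCharsets.UTF_8));
                digest.update((byte) 0); // separates the path from the fixed-length content hash
                digest.update(MessageDigest.getInstance("SHA-256").digest(Files.readAllBytes(file)));
            }
            return HexFormat.of().formatHex(digest.digest());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }
}
```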

This seems possible, but we would have to write some custom code.

We can still do the building with Maven but move the management of the local repository to another tool.

Using Bazel to Manage Maven Repositories

Bazel is a build tool from Google. It uses hashes to decide whether something has to be rebuilt. For each action that Bazel performs, it records the hashes of all inputs to this action and the hashes of its outputs. If the input hashes stay the same between two builds, the outputs can be reused.
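A toy model shows why this makes caching safe. The sketch keeps an in-memory map from a hash of the command and all input contents to the produced output. It only illustrates the idea and is not how Bazel stores action results; Bazel’s real action keys cover more, for example environment variables. ToyActionCache is a hypothetical name.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.HashMap;
import java.util.HexFormat;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;

final class ToyActionCache {
    private final Map<String, byte[]> outputsByKey = new HashMap<>();
    int executions = 0; // how often an action actually ran

    // The key combines the command with the name and content hash of every input.
    // A TreeMap iterates in a stable order, so equal inputs always give equal keys.
    static String key(String command, TreeMap<String, byte[]> inputs) {
        try {
            MessageDigest d = MessageDigest.getInstance("SHA-256");
            d.update(command.getBytes(StandardCharsets.UTF_8));
            for (Map.Entry<String, byte[]> input : inputs.entrySet()) {
                d.update((byte) 0);
                d.update(input.getKey().getBytes(StandardCharsets.UTF_8));
                d.update((byte) 0);
                d.update(MessageDigest.getInstance("SHA-256").digest(input.getValue()));
            }
            return HexFormat.of().formatHex(d.digest());
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }

    // Runs the action only if no output has been recorded for its key yet.
    byte[] run(String command, TreeMap<String, byte[]> inputs,
               Function<TreeMap<String, byte[]>, byte[]> action) {
        return outputsByKey.computeIfAbsent(key(command, inputs), k -> {
            executions++;
            return action.apply(inputs);
        });
    }
}
```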

It also has support for using remote caches to share outputs between builds on different machines. That sounds exactly like what we are looking for.

To use Bazel for our build, we first have to work out two things:

- Which Maven modules are in frontend, backend and glue?

- What are the inputs for our 3 parts?

Sorting Modules into Frontend, Backend and Glue

We can start with frontend because it’s easy. It’s just a single Maven module.

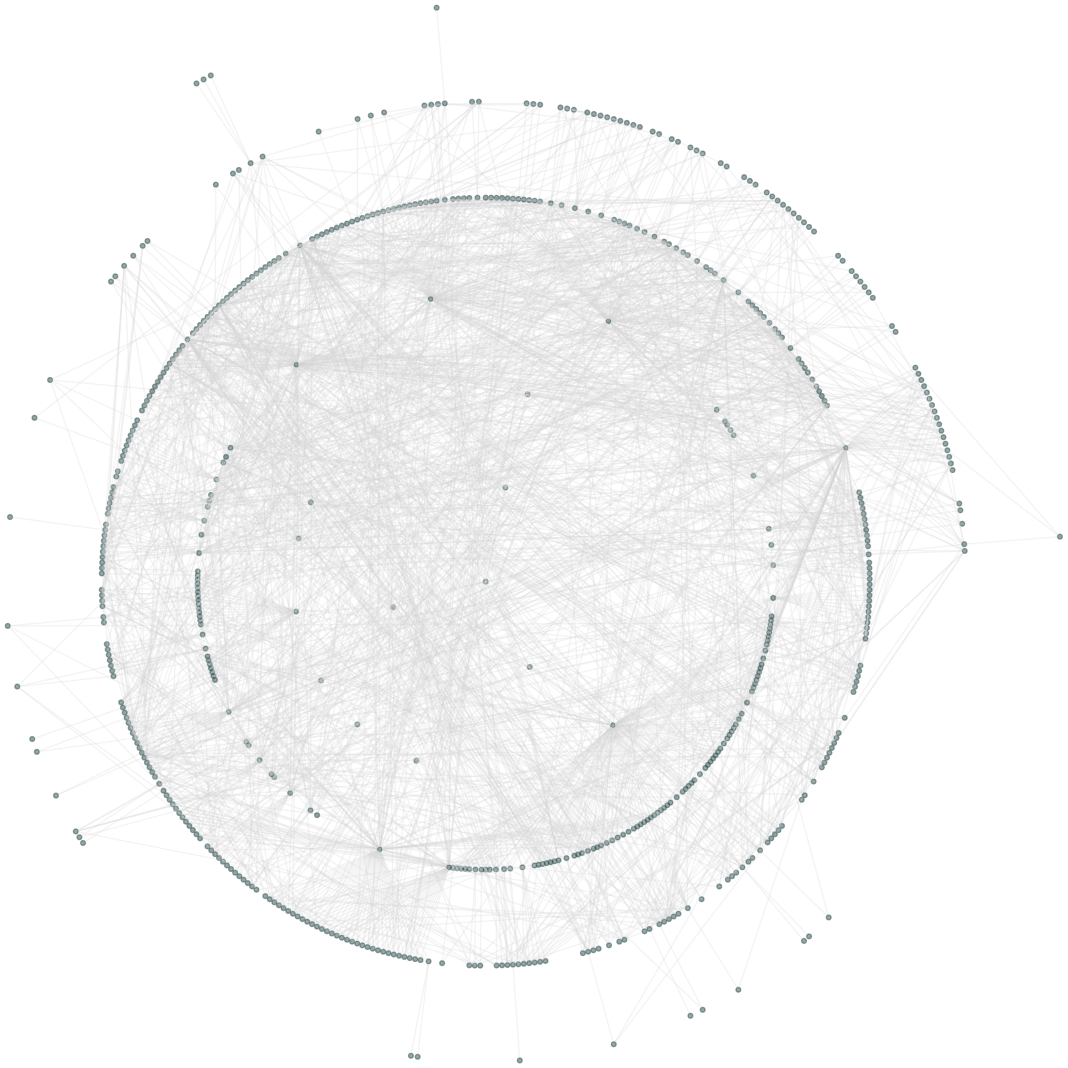

For glue and backend we have to analyze our module graph.

The module that depends on both backend and frontend is called web-application.

We wrote a small program that parses the pom files and creates our module graph. Once we have the module graph, we can determine which modules belong to backend and which to glue:

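```java
// Backend: everything web-application depends on, directly or transitively,
// minus frontend. transitiveClosure is assumed to exclude web-application itself.
// Modules are identified by artifact ID; copies keep the graph's own sets untouched.
Set<String> backend = new HashSet<>(moduleGraph.transitiveClosure("web-application"));
backend.remove("frontend");

// Glue: every remaining module, including web-application itself.
Set<String> glue = new HashSet<>(moduleGraph.nodes());
glue.remove("frontend");
glue.removeAll(backend);
```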
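The snippet depends on our internal module graph class. To make the set arithmetic reproducible, here is a minimal stand-in. It assumes modules are identified by artifact ID and that edges point from a module to its direct dependencies. ModuleGraph and its methods are hypothetical; in our build, the graph is created from the parsed pom files.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.HashSet;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

final class ModuleGraph {
    // module -> modules it depends on directly
    private final Map<String, Set<String>> dependencies = new HashMap<>();

    void addModule(String module) {
        dependencies.computeIfAbsent(module, m -> new LinkedHashSet<>());
    }

    void addDependency(String from, String to) {
        addModule(from);
        addModule(to);
        dependencies.get(from).add(to);
    }

    // A fresh, mutable copy of all modules.
    Set<String> nodes() {
        return new HashSet<>(dependencies.keySet());
    }

    // All modules reachable from root via dependency edges, excluding root itself.
    Set<String> transitiveClosure(String root) {
        Set<String> seen = new HashSet<>();
        Deque<String> todo = new ArrayDeque<>(dependencies.getOrDefault(root, Set.of()));
        while (!todo.isEmpty()) {
            String next = todo.pop();
            if (seen.add(next)) {
                todo.addAll(dependencies.getOrDefault(next, Set.of()));
            }
        }
        seen.remove(root); // only relevant if a cycle led back to root
        return seen;
    }
}
```

Suppose web-application depends on frontend and module-b, module-b depends on module-a, and a distribution module depends on web-application. This yields module-a and module-b as backend, and web-application and distribution as glue.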

Knowing which modules belong to which part, we can tackle the next question: what are the inputs?

Determining Inputs for Frontend, Backend and Glue

Each of our three parts is built by Maven. The first thing we need is the set of Maven plugins we use during our build. In the same step, we can also get our external Maven dependencies.

Bazel builds should be hermetic, and fetching things from the network is the job of repository rules. So to get our plugins and dependencies, we need a repository rule.

To get our plugins and external dependencies, one might think that dependency:go-offline has us covered.

Sadly, it doesn’t.

You can try it by running:

```shell
mvn dependency:go-offline -Dmaven.repo.local=/tmp/m2
mvn --offline verify -Dmaven.repo.local=/tmp/m2
```

There’s an open issue describing the problem. The short version is that plugin dependencies are not correctly brought offline, which makes your build fail.

Instead, our repository rule simply runs mvn verify -Dmaven.repo.local=/tmp/m2, which builds the whole application and thereby populates our repository with plugins and external dependencies.

After running Maven, our repository rule sets the download date in the _remote.repositories files to a fixed value to make the result reproducible.
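Here is a sketch of that normalization as a standalone Java helper; the repository rule itself is written in Starlark. The sketch assumes the download date is stored as a #-prefixed comment line next to the #NOTE header, as java.util.Properties writes it. It replaces every such line with a constant. The class name and the fixed date are arbitrary choices.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Stream;

final class RemoteRepositoriesNormalizer {
    // Any constant works; it only has to be identical across builds.
    static final String FIXED_DATE_LINE = "#Thu Jan 01 00:00:00 UTC 1970";

    // Rewrites every _remote.repositories file below repoRoot, replacing the
    // timestamp comment with FIXED_DATE_LINE. Returns the number of files rewritten.
    static int normalize(Path repoRoot) {
        try (Stream<Path> walk = Files.walk(repoRoot)) {
            List<Path> files = walk
                    .filter(Files::isRegularFile)
                    .filter(p -> p.getFileName() != null
                            && p.getFileName().toString().equals("_remote.repositories"))
                    .toList();
            for (Path file : files) {
                List<String> out = new ArrayList<>();
                for (String line : Files.readAllLines(file)) {
                    boolean timestampComment = line.startsWith("#") && !line.startsWith("#NOTE");
                    out.add(timestampComment ? FIXED_DATE_LINE : line);
                }
                Files.write(file, out);
            }
            return files.size();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```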

To determine the other inputs of our build, we start with frontend.

It’s a Maven module where we only use Maven to run npm and webpack.

The module should be rebuilt when either its external dependencies or its sources change.

We can use package-lock.json and src/** as inputs.

The output of our frontend is a zip file containing all JS bundles.

Let’s continue with backend. Like frontend, it has no project-internal dependencies. So the information we need is:

- Which modules are built?

- Where are they located on disk?

- What are their Maven coordinates?

We pass this information to our custom rule mvn_multi_module:

```starlark
mvn_multi_module(
    name = "backend",
    modules = [
        "Module_A:org.example:module-a",
        "Module_B:org.example:module-b",
    ],
    srcs = glob(["Module_A/**"], exclude = ["Module_A/target/**"]) +
           glob(["Module_B/**"], exclude = ["Module_B/target/**"]),
    deps = ["@mvn-external//:deps"],
)
```

The modules attribute is a list of strings.

We encode the location on disk (Module_A), the group ID (org.example) and the artifact ID (module-a) as colon-separated strings.

If a module builds more than just a jar, the artifact ID can be followed by further classifiers like test-jar.
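Parsing this format is straightforward. Our rule does it in Starlark with the _parse helper. The following Java sketch handles the same format, for example for use in the build file generator. ModuleSpec is a hypothetical name, and rejecting empty segments is an assumption about what counts as a valid entry.

```java
import java.util.Arrays;
import java.util.List;

// One entry of the mvn_multi_module modules attribute.
record ModuleSpec(String location, String groupId, String artifactId, List<String> classifiers) {

    // Parses "location:groupId:artifactId[:classifier...]",
    // e.g. "Module_B:org.example:module-b:test-jar".
    static ModuleSpec parse(String spec) {
        String[] parts = spec.split(":", -1); // -1 keeps trailing empty segments so they get rejected
        if (parts.length < 3 || Arrays.stream(parts).anyMatch(String::isEmpty)) {
            throw new IllegalArgumentException(
                    "expected location:groupId:artifactId[:classifier...] but got " + spec);
        }
        return new ModuleSpec(parts[0], parts[1], parts[2],
                List.copyOf(Arrays.asList(parts).subList(3, parts.length)));
    }
}
```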

In srcs we use the glob function to find our inputs, ignoring Maven’s target directory.

We could derive srcs from modules, but when I implemented this, I did not know that a macro could do it for us.
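Our build files are generated anyway, so the generator can also derive srcs from the module locations. This is a hypothetical sketch of that part of a Java build file generator. The class name and the exact output formatting are assumptions.

```java
import java.util.List;
import java.util.stream.Collectors;

final class MvnMultiModuleGenerator {
    // Renders an mvn_multi_module call. srcs is derived from each module's location
    // (the part before the first ':'), excluding Maven's target/ directory.
    static String render(String name, List<String> modules, List<String> deps) {
        String moduleLines = modules.stream()
                .map(m -> "        \"" + m + "\",\n")
                .collect(Collectors.joining());
        String srcs = modules.stream()
                .map(m -> m.substring(0, m.indexOf(':')))
                .distinct()
                .map(loc -> "glob([\"" + loc + "/**\"], exclude = [\"" + loc + "/target/**\"])")
                .collect(Collectors.joining("\n        + "));
        String depLines = deps.stream()
                .map(d -> "        \"" + d + "\",\n")
                .collect(Collectors.joining());
        return "mvn_multi_module(\n"
                + "    name = \"" + name + "\",\n"
                + "    modules = [\n" + moduleLines + "    ],\n"
                + "    srcs = " + srcs + ",\n"
                + "    deps = [\n" + depLines + "    ],\n"
                + ")\n";
    }
}
```

For the two example modules, this renders an mvn_multi_module call equivalent to the one shown above. Only the layout differs.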

Last, we have deps.

For backend we only need our external dependencies @mvn-external//:deps.

For glue we would also list the modules compiled in backend.

Now that we have defined the inputs and dependencies of our 3 part build we can start building.

Building Multiple Modules

Our Bazel build file generator creates the rule invocations for mvn_multi_module, but how does it work?

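```starlark
# Provider carrying the files that belong in the local Maven repository.
LocalRepoArtifactInfo = provider(fields = ["files"])

def _mvn_multi_module_impl(ctx):
    parsed_modules = [_parse(ctx, m) for m in ctx.attr.modules]

    inputs = []
    inputs.extend(ctx.files.srcs)
    inputs.extend(ctx.files.deps)

    outputs = [_output(ctx, p) for p in parsed_modules]

    commands = _link_commands(ctx.attr.deps)
    module_list = _module_list(parsed_modules)
    commands.append("mvn verify -pl %s" % module_list)

    # Flatten the per-module outputs; the outer loop comes first in a comprehension.
    out_files = [f for o in outputs for f in o.out_files]

    ctx.actions.run_shell(
        inputs = inputs,
        outputs = out_files,
        command = " && \n".join(commands),
    )

    return [
        DefaultInfo(files = depset(out_files)),
        LocalRepoArtifactInfo(files = depset(_local_files(outputs))),
    ]

# The helpers _parse, _output, _link_commands, _module_list and _local_files are elided.
# Attribute types are inferred from the usage above.
mvn_multi_module = rule(
    implementation = _mvn_multi_module_impl,
    attrs = {
        "modules": attr.string_list(mandatory = True),
        "srcs": attr.label_list(allow_files = True),
        "deps": attr.label_list(),
    },
)
```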

First we parse our colon-separated module definitions.

Then we collect our declared inputs, srcs and deps, in a single list.

We’ll need it later to tell Bazel which inputs the Maven invocation uses.

Because these inputs are declared, Bazel automatically reruns the action when one of them changes and uses a cached result otherwise.

From the parsed module information, we generate structured information about each module’s outputs.

This contains all built artifacts, such as jars and test-jars, but also each module’s pom file and a maven-metadata.xml.

The action to generate the pom and metadata files is elided for brevity.

With these in place, we have everything we need to move the artifacts into our Bazel-managed Maven repository.

The function _link_commands generates some shell commands to link our dependencies into our local Maven repository structure.

Bazel uses a sandbox for each action and we have to make all our dependencies available in this sandbox.
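The helper itself is elided. To illustrate the kind of commands it can generate, here is the same string building in Java. It computes an artifact’s path in the standard Maven repository layout and emits a mkdir plus a symlink command. The class name, the repository root, and the use of $PWD to make symlink targets absolute are assumptions of this sketch. It also assumes source paths are relative to the action’s working directory, as Bazel file paths are.

```java
final class LocalRepoLinks {
    // Standard Maven repository layout:
    // groupId (dots as slashes)/artifactId/version/artifactId-version[-classifier].extension
    static String repositoryPath(String groupId, String artifactId, String version,
                                 String classifier, String extension) {
        String fileName = artifactId + "-" + version
                + (classifier.isEmpty() ? "" : "-" + classifier)
                + "." + extension;
        return groupId.replace('.', '/') + "/" + artifactId + "/" + version + "/" + fileName;
    }

    // Shell command that links an existing file into the repository below repoRoot.
    // "$PWD" makes the link target absolute, so the link resolves from any directory.
    static String linkCommand(String repoRoot, String sourceFile, String repositoryPath) {
        String target = repoRoot + "/" + repositoryPath;
        String dir = target.substring(0, target.lastIndexOf('/'));
        return "mkdir -p " + quote(dir) + " && ln -s \"$PWD\"/" + quote(sourceFile) + " " + quote(target);
    }

    // POSIX single-quote escaping.
    private static String quote(String s) {
        return "'" + s.replace("'", "'\"'\"'") + "'";
    }
}
```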

Before we can add the Maven command, we generate module_list, which tells Maven via the --projects (-pl) parameter which modules belong to the reactor.
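Maven’s --projects (-pl) flag takes a comma-separated list of projects, each given as [groupId]:artifactId or as a relative path. Here is a minimal Java sketch that builds that value from our module strings. ReactorSelection is a hypothetical name, and the rule’s own _module_list helper may differ.

```java
import java.util.List;
import java.util.stream.Collectors;

final class ReactorSelection {
    // Turns "location:groupId:artifactId[:classifier...]" entries into the
    // comma-separated groupId:artifactId list expected by --projects.
    static String moduleList(List<String> moduleSpecs) {
        return moduleSpecs.stream()
                .map(spec -> spec.split(":"))
                .map(parts -> parts[1] + ":" + parts[2])
                .distinct()
                .collect(Collectors.joining(","));
    }

    // The full Maven invocation as an argument list.
    static List<String> mavenCommand(List<String> moduleSpecs) {
        return List.of("mvn", "verify", "--projects", moduleList(moduleSpecs));
    }
}
```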

As a final step before calling Maven we extract the file objects from our outputs.

Now we can finally execute our link commands and call Maven with a run_shell action.

Last, we wrap the outputs in providers and return them from our rule.

With this in place we can build multiple modules with Maven invoked by Bazel. Bazel takes care of the correct caching of artifacts.

Progress so Far

We can now keep building with Maven while caching parts of our artifacts reliably.

Have we achieved our goal? Well, kinda… This works nicely on my machine. But on CI, we have to fetch our external dependencies in every build. As described above, we do this by building the whole application, which takes some time. The reason is that Bazel currently can’t use a remote cache for repository rules (see issue).

In hindsight, we should simply have used persistent CI machines, so that the repository rule results could have been taken from their local cache.

Instead, we (almost) eliminated Maven from the build.

Using Bazel to Build Almost all of our Modules

Let’s talk about the “almost” part first and get it out of the way. It’s our frontend. There we use Maven to just invoke the JS build tooling (webpack). We could port this to Bazel, but that’s a significant undertaking and something for a future phase of this project.

To build frontend, we just use frontend-maven-plugin to invoke JS-based tools. We don’t have any external Maven dependencies, so we only need a few plugins in our local repository. We pack them manually into a zip file that Bazel can download and use. This zip has to be rebuilt whenever we update plugins, but that does not happen very often.

We can still use our mvn_multi_module rule to build frontend and concentrate on building the Java parts, glue and backend, without Maven.

Building Cadenza with Bazel

Our Maven build just compiles some Java, runs tests and uses some Maven plugins for code generation and related tasks. Compiling and running Java tests is easy with Bazel. We can just use rules_java for this.

So the only obstacles are the various Maven plugins. We need an equivalent in Bazel for each of them.

First, we check what we are using. We extended our pom parsing code to also parse the plugins sections. Then we can inspect all artifact IDs and get a feeling for what we have to port. Since we are only interested in our CI build, we can safely ignore plugins only used in some profiles, like asciidoctor.

With this list in hand, we see that we mostly use code generators like antlr and xjc. The other plugins are one-off executions of the ant plugin to delete some files, plus some other minor things.
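Here is a minimal sketch of that extraction with the JDK’s DOM parser, assuming we only care about plugins declared directly in a module’s build section. Plugins inside profiles sit under a different build element and are skipped, which matches the decision to ignore profile-only plugins; pluginManagement entries are skipped as well. PomPlugins is a hypothetical name, and our actual pom parsing code is not shown in this post.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;
import org.w3c.dom.Node;

final class PomPlugins {
    // Returns the artifact IDs from <project><build><plugins><plugin>.
    static List<String> pluginArtifactIds(String pomXml) {
        try {
            Element project = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(pomXml.getBytes(StandardCharsets.UTF_8)))
                    .getDocumentElement();
            List<String> ids = new ArrayList<>();
            for (Element build : children(project, "build")) {
                for (Element plugins : children(build, "plugins")) {
                    for (Element plugin : children(plugins, "plugin")) {
                        for (Element artifactId : children(plugin, "artifactId")) {
                            ids.add(artifactId.getTextContent().trim());
                        }
                    }
                }
            }
            return ids;
        } catch (Exception e) {
            throw new IllegalArgumentException("cannot parse pom", e);
        }
    }

    // Direct child elements with the given tag name (the parser is not namespace-aware).
    private static List<Element> children(Element parent, String name) {
        List<Element> result = new ArrayList<>();
        for (Node n = parent.getFirstChild(); n != null; n = n.getNextSibling()) {
            if (n instanceof Element child && name.equals(child.getTagName())) {
                result.add(child);
            }
        }
        return result;
    }
}
```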

Let’s concentrate on the code generators.

Porting Code Generators to Bazel

Bazel can easily call other programs to take over build steps.

You just have to implement a custom rule that uses a run action to call these tools.

Communication is done via command line arguments.

The tool should produce a known set of files as output.

So if your program produces many different files and you don’t want to list them by hand, it’s best to produce a zip instead.
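Here is a sketch of such a zip step in Java, assuming the generator first writes its files to a scratch directory. Sorting the entries and giving them a fixed timestamp keeps the archive byte-for-byte identical across builds, which matters for caching. The class name and the chosen timestamp are arbitrary, and writing to memory instead of a file only keeps the example short.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.time.LocalDateTime;
import java.util.List;
import java.util.stream.Stream;
import java.util.zip.ZipEntry;
import java.util.zip.ZipOutputStream;

final class ReproducibleZip {
    // Fixed entry timestamp; any constant works as long as every build uses it.
    private static final LocalDateTime FIXED_TIME = LocalDateTime.of(2010, 1, 1, 0, 0);

    // Zips every regular file below dir. Entries are added in sorted path order
    // and carry FIXED_TIME, so identical inputs yield identical bytes.
    static byte[] zipDirectory(Path dir) {
        try (Stream<Path> walk = Files.walk(dir)) {
            List<Path> files = walk.filter(Files::isRegularFile).sorted().toList();
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ZipOutputStream zip = new ZipOutputStream(bytes)) {
                for (Path file : files) {
                    String name = dir.relativize(file).toString().replace('\\', '/');
                    ZipEntry entry = new ZipEntry(name);
                    entry.setTimeLocal(FIXED_TIME); // ignore the file's real modification time
                    zip.putNextEntry(entry);
                    zip.write(Files.readAllBytes(file));
                    zip.closeEntry();
                }
            }
            return bytes.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```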

The plan is to look at each code generator Maven plugin, see how its Mojos are implemented, and do the same behind a command line interface. All of these plugins are implemented in Java, so it makes sense to also use Java for the Bazel tools. To avoid paying the JVM startup cost many times during our build, we use persistent workers. They allow Bazel to reuse a single process for multiple actions. Instead of passing the parameters as command line arguments, Bazel serializes them into protobuf or JSON messages and communicates with the process over stdin/stdout. If we put all code generators into the same tool, we pay the JVM startup cost only once.
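Leaving the wire protocol aside, the core of one tool hosting several code generators can be a dispatch table keyed by the first argument. Bazel’s worker example covers reading requests and writing responses. In the hypothetical sketch below, the Generator interface, the generator names, and exit code 2 for usage errors are all assumptions.

```java
import java.util.List;
import java.util.Map;

// One code generator: receives its arguments (without the generator name),
// appends diagnostics to output and returns an exit code.
interface Generator {
    int run(List<String> arguments, StringBuilder output);
}

final class GeneratorWorker {
    private final Map<String, Generator> generators;

    GeneratorWorker(Map<String, Generator> generators) {
        this.generators = Map.copyOf(generators);
    }

    // Handles the arguments of one work request. The first argument selects
    // the generator; the remaining ones are passed through unchanged.
    int handle(List<String> arguments, StringBuilder output) {
        if (arguments.isEmpty()) {
            output.append("missing generator name\n");
            return 2;
        }
        Generator generator = generators.get(arguments.get(0));
        if (generator == null) {
            output.append("unknown generator: ").append(arguments.get(0)).append('\n');
            return 2;
        }
        return generator.run(arguments.subList(1, arguments.size()), output);
    }
}
```

Diagnostics are collected in a buffer rather than printed, because a persistent worker’s stdout carries the protocol messages. The buffer’s content would go into the work response.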

A single worker process could become a bottleneck for our build, though: by default, a persistent worker handles only one action at a time. But we can simply make it a multiplex worker instead, which handles several requests concurrently in the same process.

The worker example from Bazel gives you a nice starting point for implementing your own worker.
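In multiplex mode, Bazel can send several requests to the same process. Each request carries a request ID, and responses may be returned in any order as long as they echo that ID. Below is a minimal sketch of the concurrency part. It uses simplified Request and Response records instead of the real protobuf or JSON messages, and the pool size of 4 is arbitrary.

```java
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;
import java.util.function.Function;

// Simplified stand-ins for Bazel's WorkRequest and WorkResponse messages.
record Request(int requestId, List<String> arguments) {}

record Response(int requestId, int exitCode, String output) {}

final class MultiplexWorker implements AutoCloseable {
    private final ExecutorService pool = Executors.newFixedThreadPool(4);
    private final Object writeLock = new Object();
    private final Function<List<String>, String> action;
    private final Consumer<Response> sink;

    MultiplexWorker(Function<List<String>, String> action, Consumer<Response> sink) {
        this.action = action;
        this.sink = sink;
    }

    // Called by the thread that reads requests. It returns immediately, so the
    // next request can be read while earlier ones are still running.
    void submit(Request request) {
        pool.execute(() -> {
            Response response;
            try {
                response = new Response(request.requestId(), 0, action.apply(request.arguments()));
            } catch (RuntimeException e) {
                response = new Response(request.requestId(), 1, String.valueOf(e));
            }
            // All responses share one output stream, so writes must not interleave.
            synchronized (writeLock) {
                sink.accept(response);
            }
        });
    }

    // Waits for in-flight requests, then stops the pool.
    @Override
    public void close() {
        pool.shutdown();
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```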

We added each code generator to our custom Bazel worker and wrote rules to invoke them.

To take full advantage of Bazel’s caching, it’s important to take care of reproducibility. After implementing such a code generator, it’s good to build twice and compare the execution logs. The diff shows which files differ between the two builds.
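Bazel can write such a log with --execution_log_json_file. Suppose the output paths and their digests have already been extracted from two logs into maps. Then finding the culprits is a simple comparison. This is a minimal sketch: ExecLogDiff is a hypothetical name, and the log parsing is left out.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.TreeSet;

final class ExecLogDiff {
    // Given output path -> digest for two builds, returns (sorted) the paths whose
    // digests differ or that exist in only one build. These outputs point to
    // non-reproducible actions.
    static List<String> differingOutputs(Map<String, String> first, Map<String, String> second) {
        TreeSet<String> allPaths = new TreeSet<>(first.keySet());
        allPaths.addAll(second.keySet());
        List<String> differing = new ArrayList<>();
        for (String path : allPaths) {
            if (!Objects.equals(first.get(path), second.get(path))) {
                differing.add(path);
            }
        }
        return differing;
    }
}
```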

To further analyze differences I wholeheartedly recommend diffoscope. It easily diffs whole directories and also the contents of archives.

The most common sources of non-reproducibility are timestamps in archives and comments with a generation timestamp in generated files. Some code generators offer options to disable these comments; others need a post-processing step to remove them.
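Such a post-processing step can be a simple line filter. This sketch assumes the generator marks its timestamp with a recognizable line comment. The "Generated on:" pattern is only an example and has to be adapted to what each generator actually emits.

```java
import java.util.regex.Pattern;
import java.util.stream.Collectors;

final class StripGeneratedTimestamps {
    // Example pattern (an assumption): a line comment containing "Generated on:".
    static final Pattern TIMESTAMP_COMMENT = Pattern.compile("^\\s*//.*Generated on:.*$");

    // Drops every line matching the pattern, keeps the others unchanged,
    // and terminates the result with a single newline.
    static String strip(String source, Pattern timestampComment) {
        return source.lines()
                .filter(line -> !timestampComment.matcher(line).matches())
                .collect(Collectors.joining("\n", "", "\n"));
    }
}
```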

Epilogue

We’ve taken care of the code generators and other Maven plugins. Since we want to keep maintenance of the Bazel build to a minimum, we kept our approach of parsing the pom files and generating the Bazel build files from them.

In order to keep the two builds as similar as possible, we use a custom rule that basically mirrors the contents of a pom file.

I hope I could show that a build migration does not have to be a multi-month big-bang project but can be done step by step. I recommend cutting corners wherever possible. We did not write a tool to migrate every Maven build, only our own. This made some things kinda easy. Nonetheless, there was a learning curve.

My personal opinion on Bazel is that it’s a great tool and the best build system I’ve worked with. For small projects it’s probably overkill, but it’s a good fit for large builds. It’s basically an opinionated toolbox for building software. If you accept those opinions, you are rewarded with a fast and correct build, as advertised. Even if you don’t plan on using it, I’d recommend understanding how it works. Bazel encodes a lot of good ideas.

The tooling around Bazel is also top-notch, from remote caching and remote execution to its build event log. It gets a lot of things right.

It has bugs and other flaws, like limited support for Java modules. Nonetheless I’d recommend it.